Can I trust this tool?

The most accurate AI detector according to the RAID benchmark

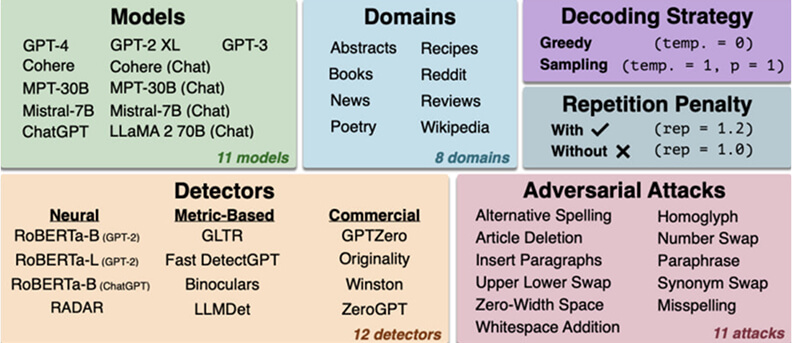

RAID is currently the largest and most robust benchmark for evaluating AI-text detectors. It contains over 6 million text samples generated by 11 different models across 8 domains.

It’s AI took first place with more than 95.8% accuracy at a 5% false positive rate (FPR), outperforming all other AI detectors evaluated.
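For readers unfamiliar with "accuracy at 5% FPR", here is a minimal sketch of how such a number can be computed. It assumes the metric works as follows: the decision threshold is set so that at most 5% of human-written texts are wrongly flagged, and accuracy is then the share of AI-written texts flagged at that threshold. The function names and the scores are made up for illustration; this is not the RAID evaluation code.

```python
import math


def threshold_at_fpr(human_scores, target_fpr=0.05):
    """Return a threshold such that at most target_fpr of human texts score above it.

    A text is flagged as AI-written when its score is strictly greater
    than the threshold. (Hypothetical helper, for illustration only.)
    """
    ranked = sorted(human_scores, reverse=True)
    # Maximum number of human texts we are allowed to flag by mistake.
    allowed = math.floor(target_fpr * len(ranked) + 1e-9)
    if allowed >= len(ranked):
        return float("-inf")
    # Everything strictly above the (allowed + 1)-th highest human score is flagged.
    return ranked[allowed]


def detection_rate(ai_scores, threshold):
    """Share of AI-written texts flagged at the given threshold."""
    return sum(score > threshold for score in ai_scores) / len(ai_scores)


# Illustrative detector scores (higher means "more likely AI-written").
human_scores = [i / 100 for i in range(20)]   # 0.00, 0.01, ..., 0.19
ai_scores = [0.10, 0.50, 0.90, 0.95]

threshold = threshold_at_fpr(human_scores, target_fpr=0.05)
rate = detection_rate(ai_scores, threshold)
print(f"threshold={threshold}, detection rate at 5% FPR={rate}")
```

Fixing the false positive rate first makes detectors comparable: each one is allowed the same share of false alarms on human text before its detection rate is measured.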

98% accuracy on GRiD, HC3, and Ghostbuster

The GPT Reddit Dataset (GRiD) consists of context-prompt pairs sourced from Reddit, with responses written by humans and responses generated by ChatGPT.

The HC3 (Human ChatGPT Comparison Corpus) dataset consists of nearly 40K questions and their corresponding human/ChatGPT answers.

The Ghostbuster dataset uses the GPT-3.5-turbo model to generate texts in the domains of creative writing, news, and student essays.

On these datasets, It’s AI achieved 98% accuracy and a 96% F1 score, outperforming all detectors considered in the paper (commercial detectors were not considered there).
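Accuracy and F1 score measure different things, which is why the two figures differ. The sketch below shows the standard definitions on a tiny made-up example, where label 1 means "AI-generated" and 0 means "human-written". The function name and the data are hypothetical and are not taken from the benchmark.

```python
def accuracy_and_f1(y_true, y_pred):
    """Compute accuracy and F1 for binary labels (1 = AI-generated, 0 = human)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # AI texts correctly flagged
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # human texts wrongly flagged
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # AI texts missed
    tn = sum(t == 0 and p == 0 for t, p in pairs)  # human texts correctly passed

    accuracy = (tp + tn) / len(pairs)
    # F1 is the harmonic mean of precision and recall: 2*TP / (2*TP + FP + FN).
    denominator = 2 * tp + fp + fn
    f1 = 2 * tp / denominator if denominator else 0.0
    return accuracy, f1


# Made-up labels: 4 AI-generated texts and 6 human-written texts.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={accuracy}, f1={f1}")
```

F1 ignores correctly passed human texts, so it can be lower than accuracy when human-written samples outnumber AI-generated ones.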

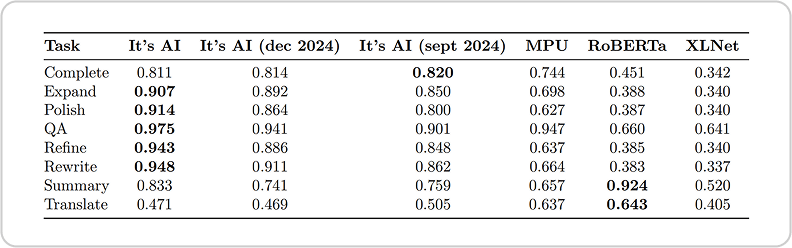

CUDRT — number one in 6/8 tasks

The CUDRT (Create, Update, Delete, Rewrite, and Translate) dataset is a comprehensive bilingual benchmark designed to evaluate AI-generated text detectors across multiple text generation scenarios.

In the CUDRT benchmark, only open-source solutions were considered. We took first place in 6 out of 8 tasks and, on average, outperformed the best solution considered by 7% in F1 score.

It’s AI — new SOTA in AI-detection

Overall, It’s AI became the new state-of-the-art (SOTA) model and outperformed other detectors on all three of the benchmarks considered, demonstrating its consistency and reliability.

Read our detailed report on benchmark scoring, measurement approaches, and our metrics.

Ensure the originality of your work